M5Stack has introduced the LLM-8850 expansion card, a compact AI acceleration module built on the M.2 M-Key 2242 standard. At its core is the Axera AX8850 SoC, delivering up to 24 TOPS of INT8 performance. Designed for versatility, the module can slot into a wide range of hosts, including the Raspberry Pi 5, Rockchip RK3588-based SBCs, and x86 mini-PCs with an available M.2 M-Key interface.

The card integrates 8GB of LPDDR4x RAM and 32Mbit of SPI NOR flash, along with a capable video subsystem. It supports 8Kp30 H.264/H.265 encoding and 8Kp60 decoding, and can decode up to 16 simultaneous 1080p streams. For stability under sustained load, the board uses active cooling (a small turbine fan paired with a CNC-milled aluminum heatsink), which is designed to prevent thermal throttling.
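
An 8K frame (7680 × 4320) holds exactly sixteen 1080p frames (4× wider and 4× taller). So if the decoder's capacity is set mainly by pixel throughput, the 16-stream figure lines up with the 8K decode rating. The sketch below checks that arithmetic. Pixel-throughput scaling and the 60 fps per-stream rate are assumptions, since the spec does not give a frame rate for the 1080p streams.

```python
# Back-of-envelope check: does "16 x 1080p" line up with the 8K decode figure?
# Assumption (not from the spec sheet): decoder capacity scales with pixel throughput.


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixel throughput of a single stream at the given resolution and frame rate."""
    return width * height * fps


UHD_8K = (7680, 4320)     # 8K UHD resolution
FHD_1080P = (1920, 1080)  # 1080p resolution

one_8k60 = pixels_per_second(*UHD_8K, 60)
sixteen_1080p60 = 16 * pixels_per_second(*FHD_1080P, 60)  # 60 fps per stream is assumed

# One 8K frame contains exactly 16 1080p frames, so the two workloads match.
print(UHD_8K[0] * UHD_8K[1] == 16 * FHD_1080P[0] * FHD_1080P[1])  # True
print(one_8k60 == sixteen_1080p60)  # True
print(f"{one_8k60 / 1e9:.2f} Gpixel/s")
```

Real decoders also have per-stream overheads, so treat this as a sanity check on the spec figures rather than a capacity guarantee.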

M5Stack LLM-8850 — Specifications & Feature Summary

An M.2 M-Key AI acceleration module powered by the Axera AX8850, ready to drop into any compatible host. The table below summarizes its key specifications and features.

| Item | Specification |
|---|---|
| SoC | Axera AX8850 |
| CPU | 8 × Cortex-A55, up to 1.7 GHz |
| NPU | 24 TOPS (INT8) |
| VPU (encode) | H.264 / H.265, up to 8K @ 30 fps; scaling and cropping |
| VPU (decode) | H.264 / H.265, up to 8K @ 60 fps; up to 16 concurrent 1080p streams; scaling and cropping |
| Memory | 8GB LPDDR4x, 64-bit, 4266 Mbps |
| Storage | 32 Mbit QSPI NOR flash (bootloader only) |
| Host Interface | M.2 M-Key (2242), PCIe 2.0 ×2 |
| Cooling | Turbine fan + CNC-milled aluminum heatsink |
| Power | 3.3 V via M.2 connector; under 7 W max |
| Dimensions | 42.6 × 24.0 × 9.7 mm |
| Weight | 14.7 g |
| Operating Temp. | 0–60 °C (module reaches about 70 °C under sustained load at room temperature) |
| OS Support | Ubuntu 20.04 / 22.04 / 24.04, Debian 12 (Linux only; requires the vendor driver, which provides the axcl-smi tool) |
| Typical Applications | LLM inference, vision processing, multimodal and audio models |
| MSRP / Channels | $99; M5Stack store, AliExpress |
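
Two rows in the table translate directly into peak bandwidth figures: the 64-bit LPDDR4x bus and the PCIe 2.0 ×2 host link. The sketch below computes both. It assumes the table's "4266 Mbps" is the per-pin data rate (equivalent to 4266 MT/s), and it uses the standard PCIe 2.0 signalling rate of 5 GT/s per lane with 8b/10b encoding. Both results are theoretical ceilings, not measured throughput.

```python
# Theoretical peak bandwidth derived from the spec table.
# These are upper bounds; real-world throughput will be lower.


def dram_peak_gbps(bus_width_bits: int, transfer_rate_mtps: float) -> float:
    """Peak DRAM bandwidth in GB/s: bytes per transfer x transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mtps * 1e6 / 1e9


def pcie2_peak_gbps(lanes: int) -> float:
    """Peak PCIe 2.0 payload bandwidth per direction in GB/s.

    PCIe 2.0 signals at 5 GT/s per lane with 8b/10b encoding,
    so each lane carries 5e9 * 8/10 = 4e9 bits/s (500 MB/s) of payload.
    """
    payload_bits_per_lane = 5e9 * 8 / 10
    return lanes * payload_bits_per_lane / 8 / 1e9


if __name__ == "__main__":
    # Assumes "4266 Mbps" in the table means 4266 MT/s per pin.
    print(f"LPDDR4x, 64-bit @ 4266 MT/s: {dram_peak_gbps(64, 4266):.1f} GB/s")
    print(f"PCIe 2.0 x2 (per direction):  {pcie2_peak_gbps(2):.1f} GB/s")
```

The on-card memory (about 34.1 GB/s) is roughly 34× faster than the host link (1.0 GB/s). This is one reason the card carries its own 8GB of RAM: model weights need to stay resident on the card rather than being streamed over PCIe during inference.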

Software Support and Compatibility

The LLM-8850 requires a Linux host. It supports Ubuntu 20.04 / 22.04 / 24.04 and Debian 12, and does not currently support Windows, macOS, or even WSL, because its driver (which provides the axcl-smi management tool) is only available for Linux.
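
Because only native Linux hosts are supported, a quick pre-flight check can save time before installing models. The sketch below is hypothetical and is not an official M5Stack or Axera tool. It assumes that the driver package installs axcl-smi on PATH, and that running it with no arguments prints device status. It detects WSL heuristically, by looking for "microsoft" in the kernel release string.

```python
import platform
import shutil
import subprocess


def check_llm8850_host() -> dict:
    """Hypothetical pre-flight check for an LLM-8850 host (not an official tool)."""
    is_linux = platform.system() == "Linux"
    # Heuristic: WSL kernels report "microsoft" in their release string.
    is_wsl = is_linux and "microsoft" in platform.release().lower()
    # Assumption: the vendor driver package puts axcl-smi somewhere on PATH.
    tool_path = shutil.which("axcl-smi")
    return {"linux": is_linux, "wsl": is_wsl, "axcl_smi_path": tool_path}


if __name__ == "__main__":
    status = check_llm8850_host()
    if not status["linux"] or status["wsl"]:
        print("Unsupported host: the LLM-8850 driver requires native Linux.")
    elif status["axcl_smi_path"] is None:
        print("axcl-smi not found on PATH; install the driver package first.")
    else:
        # Assumption: invoking axcl-smi without arguments prints device status.
        subprocess.run([status["axcl_smi_path"]], check=False)
```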

Once the driver is installed on a Raspberry Pi 5, Linux SBC, or mini-PC, developers can access demo programs and model packages via the official wiki. Supported models cover a broad range of AI tasks:

- Vision: YOLO11, Yolo-World-V2, Yolov7-face, Depth-Anything-V2, MixFormer-V2, Real-ESRGAN, Super-Resolution, RIFE

- Large Language Models (LLMs): Qwen3-0.6B, Qwen3-1.7B, Qwen2.5-0.5B-Instruct, Qwen2.5-1.5B-Instruct, DeepSeek-R1-Distill-Qwen-1.5B, MiniCPM4-0.5B

- Multimodal: InternVL3-1B, Qwen2.5-VL-3B-Instruct, SmolVLM2-500M-Video-Instruct, LibCLIP

- Audio: Whisper, MeloTTS, SenseVoice, CosyVoice2, 3D-Speaker-MT

- Generative: lcm-lora-sdv1-5, SD1.5-LLM8850, LivePortrait

Using the LLM-8850 with Raspberry Pi 5

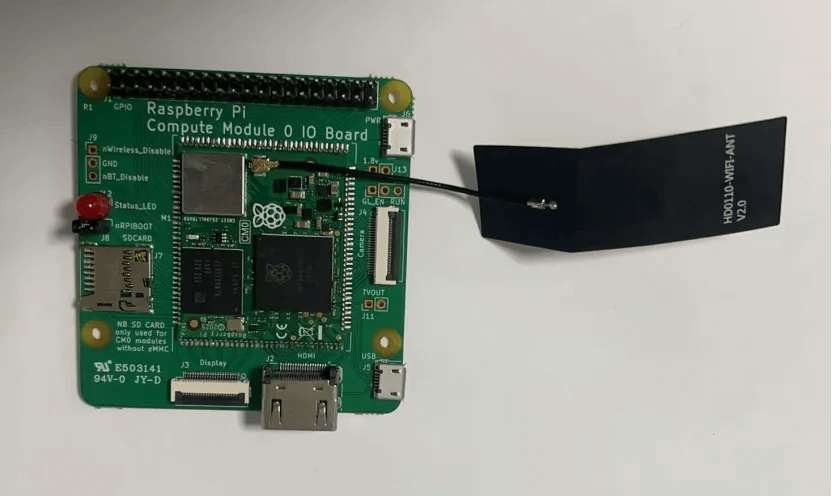

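To connect the LLM-8850 to a Raspberry Pi 5, M5Stack provides an M.2 HAT+ M-Key adapter board, enabling stable PCIe communication between the two. Note that the Pi 5's external PCIe connector exposes a single lane, so on this host the card's PCIe 2.0 ×2 link runs as ×1.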

Official benchmark results are not yet available, but early figures on the wiki show, for example, the Qwen3-0.6B model reaching 12.88 tokens/s with w8a16 quantization (8-bit weights, 16-bit activations).
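
To put the 12.88 tokens/s figure in context, the sketch below estimates two things: how much memory the weights occupy at 8 versus 16 bits, and how long a reply takes at that decode rate. The 0.6 billion parameter count is an approximation taken from the model name. The estimate covers weights only and ignores the KV cache and activations.

```python
# Rough arithmetic around the wiki's Qwen3-0.6B figure (12.88 tokens/s, w8a16).
# Assumptions: ~0.6e9 parameters; weights only (KV cache and activations ignored).


def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB for a given quantization bit width."""
    return num_params * bits_per_weight / 8 / 1e9


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock time to generate num_tokens at a steady decode rate."""
    return num_tokens / tokens_per_second


PARAMS = 0.6e9        # assumed parameter count for Qwen3-0.6B
TOKENS_PER_S = 12.88  # early figure reported on the wiki

if __name__ == "__main__":
    print(f"w8 weights:   {weight_footprint_gb(PARAMS, 8):.2f} GB")   # w8a16 weights
    print(f"fp16 weights: {weight_footprint_gb(PARAMS, 16):.2f} GB")  # unquantized baseline
    print(f"256 tokens:   {generation_seconds(256, TOKENS_PER_S):.1f} s")
```

At this rate a 256-token reply takes roughly 20 seconds. Halving the weight size with 8-bit weights also leaves more of the 8GB of on-card memory free for the KV cache.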

In raw compute, the LLM-8850's 24 TOPS is comparable to the 26 TOPS of the Hailo-8, another popular edge AI module. However, the Hailo-8 is primarily optimized for computer vision workloads, whereas the AX8850 also targets large language model inference. This gives the LLM-8850 an edge for LLM and multimodal workloads.

In terms of pricing, the LLM-8850 sits in the same bracket as most AI accelerator modules. At $99, it is more affordable than the Raspberry Pi AI HAT+ (26 TOPS, $110) and significantly undercuts the Hailo-8 M.2 expansion card (~$200).
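
One simple way to compare these prices is peak INT8 TOPS per dollar. The sketch below uses the prices and ratings quoted above; the Hailo-8 price is approximate. Peak TOPS is a coarse metric, and it does not capture the architectural differences between NPUs or how well each one runs a given model.

```python
# Peak INT8 TOPS per dollar for the modules compared above.
# Prices and ratings are the figures quoted in this article; "~$200" is approximate.

modules = {
    "LLM-8850 (AX8850)": (24, 99),
    "Raspberry Pi AI HAT+ (26 TOPS)": (26, 110),
    "Hailo-8 M.2 card": (26, 200),
}


def tops_per_dollar(tops: float, price_usd: float) -> float:
    """Peak INT8 TOPS per US dollar of list price."""
    return tops / price_usd


if __name__ == "__main__":
    # Sort from best to worst value.
    ranked = sorted(modules.items(), key=lambda kv: tops_per_dollar(*kv[1]), reverse=True)
    for name, (tops, price) in ranked:
        print(f"{name:32s} {tops_per_dollar(tops, price):.3f} TOPS/$")
```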

The module is available through two main channels:

- AliExpress (Alibaba International)

- M5Stack’s official online store

Both list the card at $99.
